Computer Vision Powers Autonomous Vehicles

To see the road, autonomous vehicles need intelligence as well as sensors. Computer vision is the foundation of that capability. It allows vehicles to see, interpret, and act in ways that resemble a human driver. Cameras, sensors, and AI models work together to read the driving environment. These systems process video in real time and support safe driving decisions. Without computer vision, a self-driving system could not recognize lanes, signs, or moving objects.

Computer Vision In Autonomous Vehicles Explained

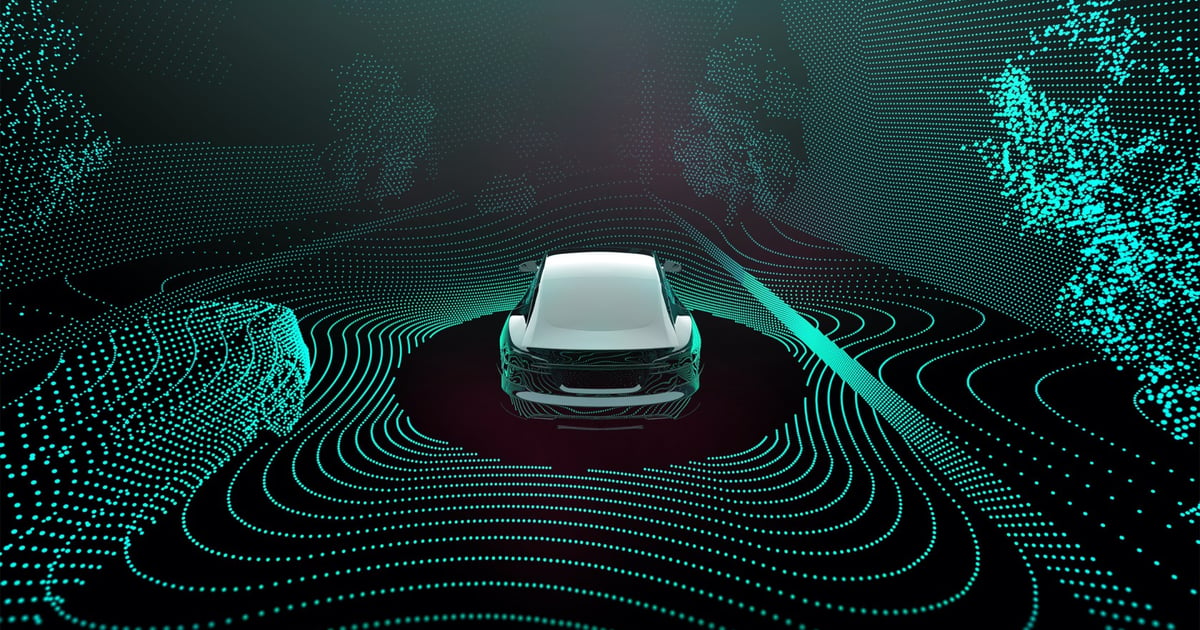

Computer vision enables vehicles to interpret images from their cameras. The cameras capture a continuous stream of frames of the road. AI models then examine these frames to find patterns. This addresses the perception problem: identifying vehicles, pedestrians, signals, and road edges. The models learn from large volumes of driving data, and with more training their accuracy and reaction speed improve. In this way, vehicles can act decisively in changing traffic conditions.

Role Of Cameras In Scene Understanding By Vehicles

Cameras are the eyes of self-driving cars. They capture images from every angle. Front cameras follow lanes and traffic flow. Side cameras monitor nearby vehicles and cyclists. Rear cameras support parking and reversing. Computer vision processes this visual input as it arrives and turns raw images into useful information. This is what lets the vehicle keep track of where it is relative to its surroundings.

How AI Reads Visual Data

AI models use deep learning to process camera images. These models learn to recognize shapes, colors, and motion. They pick out traffic lights and road markings, and they track the speed and direction of objects. This analysis happens within milliseconds, and the system updates its decisions continuously. Fast processing keeps vehicles responsive on busy roads.
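
As a rough illustration of how speed and direction can be derived once an object is tracked across frames, the sketch below compares two positions of the same object. The function name `estimate_motion`, the ground-plane coordinates in meters, and the frame interval are assumptions made for this example, not details of any particular system.

```python
import math


def estimate_motion(prev_pos, curr_pos, dt_s):
    """Estimate speed (m/s) and heading (degrees) from two tracked positions.

    prev_pos and curr_pos are hypothetical (x, y) ground-plane coordinates
    in meters; dt_s is the time between the two frames in seconds.
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    dx = curr_pos[0] - prev_pos[0]
    dy = curr_pos[1] - prev_pos[1]
    speed_mps = math.hypot(dx, dy) / dt_s
    # Heading is measured counterclockwise from the +x axis.
    heading_deg = math.degrees(math.atan2(dy, dx))
    return speed_mps, heading_deg


# Example: an object moved 3 m along x and 4 m along y in half a second.
speed, heading = estimate_motion((0.0, 0.0), (3.0, 4.0), 0.5)
```

A real tracker would smooth these estimates over many frames, since a single pair of positions is sensitive to detection noise.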

Object Detection And Recognition

Object detection is essential to safe driving. Computer vision can detect cars, people, animals, and obstacles. It can also identify traffic signs and signals. Each detected object is assigned a priority level, and the vehicle reacts according to the risk. If something suddenly appears in its path, the vehicle brakes or steers around it. This reduces the likelihood of collisions and improves road safety.
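
One simple way to picture risk-based prioritization is to rank detected objects by time to collision (distance divided by closing speed). The sketch below is a minimal example under that assumption; the `Detection` fields, the function names, and the 2-second and 4-second thresholds are hypothetical values chosen for illustration, not figures from a production system.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    distance_m: float         # gap between the vehicle and the object
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(det):
    """Seconds until contact at the current closing speed (inf if not closing)."""
    if det.closing_speed_mps <= 0:
        return float("inf")
    return det.distance_m / det.closing_speed_mps


def prioritize(detections):
    """Return detections ordered from most to least urgent."""
    return sorted(detections, key=time_to_collision)


def choose_action(detections, brake_ttc_s=2.0, caution_ttc_s=4.0):
    """Pick a response based on the most urgent detection (illustrative thresholds)."""
    if not detections:
        return "continue"
    ttc = min(time_to_collision(d) for d in detections)
    if ttc < brake_ttc_s:
        return "brake"
    if ttc < caution_ttc_s:
        return "slow"
    return "continue"


scene = [
    Detection("car", 40.0, 5.0),         # 8.0 s to collision
    Detection("pedestrian", 6.0, 4.0),   # 1.5 s to collision
    Detection("cyclist", 20.0, -1.0),    # moving away
]
action = choose_action(scene)  # "brake", because of the pedestrian
```

Time to collision is only one possible risk measure; a fuller system would also consider object type, predicted paths, and whether steering is a safer option than braking.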

Lane Detection And Road Understanding

Lane detection keeps cars on a safe path. Cameras capture road markings and edges, and computer vision distinguishes lanes from other surfaces. It follows curves and copes with shadows and weathered paint. The system also projects the lane's direction ahead. This supports smooth steering and lane changes. It also helps vehicles stay centered in their lane when visibility is poor.
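
To make lane centering concrete, the sketch below fits a straight line through the pixel positions of each lane marking and measures how far the lane center sits from the image center. It assumes the marking pixels have already been found by an earlier detection step, that the lane is roughly straight near the vehicle, and that the camera is mounted on the vehicle's centerline. The function names and the sample coordinates are made up for illustration.

```python
def fit_line(points):
    """Least-squares fit of x = slope * y + intercept through (x, y) pixels.

    Fitting x as a function of y keeps near-vertical lane lines well behaved.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points)
    if var_y == 0:
        raise ValueError("points must span more than one image row")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov_xy / var_y
    intercept = mean_x - slope * mean_y
    return slope, intercept


def lane_center_offset(left_pts, right_pts, image_width, row):
    """Pixel offset of the lane center from the image center at a given row.

    Positive means the lane center lies to the right of the image center.
    """
    left_slope, left_icpt = fit_line(left_pts)
    right_slope, right_icpt = fit_line(right_pts)
    left_x = left_slope * row + left_icpt
    right_x = right_slope * row + right_icpt
    return (left_x + right_x) / 2 - image_width / 2


# Hypothetical marking pixels in a 640x480 frame.
left = [(200, 400), (250, 300), (300, 200)]
right = [(460, 400), (410, 300), (360, 200)]
offset_px = lane_center_offset(left, right, image_width=640, row=480)
```

A steering controller could use this offset as its error signal. Curved roads would need a higher-order fit than the straight line used here.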

Real-Time Road Intelligence While On The Go

Driverless operation depends on immediate decisions. Computer vision supplies AI systems with real-time data. These systems determine when to stop, turn, or speed up. The car has to weigh several factors at once, including traffic signals, pedestrians, and speed limits. Computer vision helps it make these decisions quickly and accurately. That combination of speed and accuracy is what keeps autonomous driving safe.
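
The way several inputs feed one decision can be sketched as a small set of ordered rules. The example below is deliberately simplified and entirely hypothetical: the function name, the input fields, and the rule order are assumptions for illustration, and real planners are far more elaborate.

```python
def decide(light, pedestrian_in_path, speed_kmh, limit_kmh):
    """Return one of "stop", "slow", "accelerate", or "hold".

    Rules are checked from most to least safety-critical, so a pedestrian
    or red light always wins over speed considerations.
    """
    if pedestrian_in_path or light == "red":
        return "stop"
    if light == "yellow" or speed_kmh > limit_kmh:
        return "slow"
    if speed_kmh < limit_kmh:
        return "accelerate"
    return "hold"


# Green light, clear path, but travelling above the limit.
command = decide("green", False, speed_kmh=58, limit_kmh=50)  # "slow"
```

Ordering the checks by severity is the main design choice here: it guarantees that a lower-priority rule can never override a stop condition.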

Sensor Fusion For Enriching Vision Systems

Cameras work alongside other sensors. Sensor fusion combines visual input with depth measurements. This improves distance estimation and helps the vehicle judge the gap between itself and surrounding objects accurately. Sensor fusion also improves performance in rain and fog. Visual information remains the dominant source of information about the driving scene. Taken together, the sensors provide a more complete picture of the driving environment.
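
One common way to combine two distance estimates is inverse-variance weighting, where the less noisy sensor gets more influence. The sketch below assumes each sensor reports a distance and a variance describing its uncertainty. The choice of a camera and a radar, and all of the numbers, are illustrative assumptions rather than details from a specific vehicle.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is never
    larger than the smallest input variance.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings: the camera is noisier than the radar at this range.
camera = (22.0, 4.0)  # distance in meters, variance in square meters
radar = (20.0, 1.0)
distance_m, variance = fuse_estimates([camera, radar])
# distance_m is about 20.4, pulled toward the more certain radar reading.
```

In rain or fog, a system following this approach could raise the camera's variance so that the fused estimate leans more heavily on the other sensor.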

Learning From Driving Environments

Autonomous systems learn through exposure. Computer vision models are trained on many types of roads, including city streets and highways. Feedback loops improve the models' pattern recognition as they see more data. Over time, this learning makes the system more reliable, and vehicles handle rare scenarios better. Training promotes safer performance across multiple environments.

Safety And Ethical Considerations

Computer vision systems must pass rigorous testing. Engineers simulate complex driving situations. AI models are trained with ethical considerations in mind, and edge computing enables the real-time data processing and fast decision-making they depend on. The purpose of these systems is to protect all road users. Accurate perception limits the impact of human error. This emphasis on safety helps earn public trust in autonomous vehicles.

Computer Vision For Self-Driving Cars And Beyond

Smarter vision systems will shape the future of mobility. Computer vision for self-driving cars remains a fast-moving field. As it matures, cars should handle heavy traffic with greater ease. This work contributes to safer and more efficient transport on a global scale.

Conclusion

Just like humans, self-driving cars rely heavily on vision. Computer vision ties together cameras, AI, and real-time decisions. It enables a vehicle to perceive, interpret, and act in real time. This technology has the potential to reduce accidents and improve traffic flow. As the systems mature, roads should become safer for everyone.

FAQs

Why do autonomous driving systems need cameras?

Cameras deliver the detailed visual data that perception and localization components need for object detection.

Does computer vision work in wet weather?

Yes, though more sophisticated models rely on sensor fusion and continuous learning to handle it.

How quickly can AVs perceive visual data?

They process images within milliseconds, which makes immediate driving decisions possible.

Can computer vision alone enable fully autonomous vehicles?

Computer vision is important, but it works best in concert with other sensors and AI systems.